Extract, Transform and Load (ETL) tools are an essential part of managing data today. With data coming from multiple sources in different formats, ETL tools help collect, clean, transform and integrate it into a destination system for reporting and analysis. Choosing the right ETL tool is crucial to optimizing data workflows. This guide explains what ETL tools are, their benefits and types, how they work, what they cost, how to evaluate them, which tools to consider, and more.


How can ETL Tools Help?

ETL stands for Extract, Transform, Load. It refers to the process of combining data from multiple sources into a single destination system that can be used for data analysis and reporting.

ETL tools are software products that help automate this process of extracting data from source systems, transforming it to the required format, cleansing it, and loading it into a destination data warehouse or database.

Some key benefits of using ETL tools are:

- Automates the process of moving and preparing data for analysis. This saves significant time compared to manual data manipulation.

- Provides connectors to diverse data sources such as databases, APIs and files, so data can be extracted from all of them.

- Transforms data by applying business rules, aggregations, joins, filters, etc. to prepare it for analysis.

- Improves data quality by finding and fixing inconsistencies and errors.

- Integrates data from different systems into one data warehouse or database so it can be used together.

- Optimizes performance by handling large data volumes, applying parallel processing, compression, etc.

- Offers additional features such as scheduling, monitoring and version control for managing ETL workflows.

In summary, ETL tools are critical for organizations to efficiently aggregate data from diverse sources and deliver analytics-ready, integrated data.

What are the different types of ETL tools?

There are a few major categories of ETL tools:

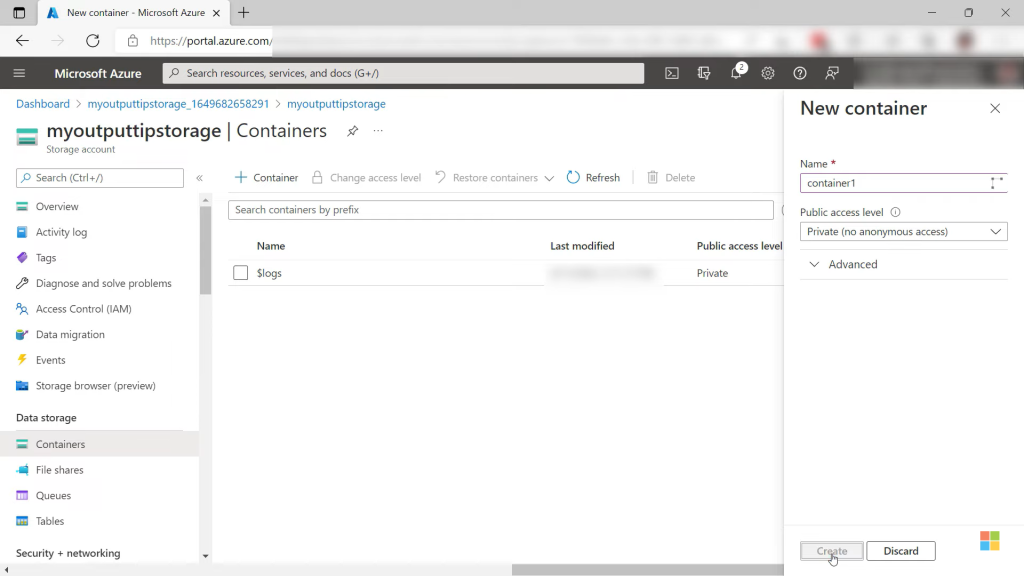

- Cloud-Based ETL Tools: These tools are hosted in the cloud and deliver ETL capabilities as managed services. Examples include AWS Glue, Azure Data Factory and Google Cloud Data Fusion. Key advantages are no infrastructure to manage, faster deployment, scalability and availability. Pricing is typically pay-as-you-go based on usage. They are ideal for organizations already running on cloud infrastructure.

- Open Source ETL Tools: These tools can be downloaded and self-hosted, typically on-premises. Examples include Pentaho Data Integration, Talend Open Studio and Apache Airflow. Benefits are no license cost and community-driven development. However, they require in-house skills for customization and support, so they suit smaller budgets only when technical resources are available.

- Commercial ETL Tools: These are proprietary, paid ETL tools from vendors such as Informatica, IBM and SAS. They provide extensive features and support services, which makes them a good fit for larger enterprises that need advanced functionality. However, they are expensive and often require long-term commitments.

How do ETL tools work?

ETL tools work by automating the key steps involved in data extraction, transformation and loading:

- Extract: The tool connects to various source systems via native connectors. It extracts the required data using SQL or other queries. Sources may include databases, APIs, files, etc.

- Transform: Once data is extracted, transformations are applied such as joins, aggregations, filtering, deduplication, encryption, etc. This prepares the data for analysis.

- Load: The processed data is then loaded into the target database or data warehouse. This may involve tasks like defining schemas, managing indexes and partitions.
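To make the three steps concrete, here is a minimal Python sketch using only the standard library. The CSV text stands in for a source system, an in-memory SQLite database stands in for the warehouse, and the table and column names are hypothetical; real ETL tools wrap the same extract, transform and load stages in connectors, schedulers and monitoring.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API or database.
RAW_CSV = """order_id,customer,amount,country
1,alice,120.50,us
2,bob,,de
3,alice,80.00,us
3,alice,80.00,us
"""


def extract(text):
    """Extract: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: filter incomplete rows, deduplicate, normalize types and casing."""
    seen = set()
    out = []
    for row in rows:
        if not row["amount"]:
            continue  # filter: skip records with a missing amount
        if row["order_id"] in seen:
            continue  # deduplicate on the business key
        seen.add(row["order_id"])
        out.append((int(row["order_id"]), row["customer"].title(),
                    float(row["amount"]), row["country"].upper()))
    return out


def load(records, conn):
    """Load: define the target schema if needed, then insert the rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```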

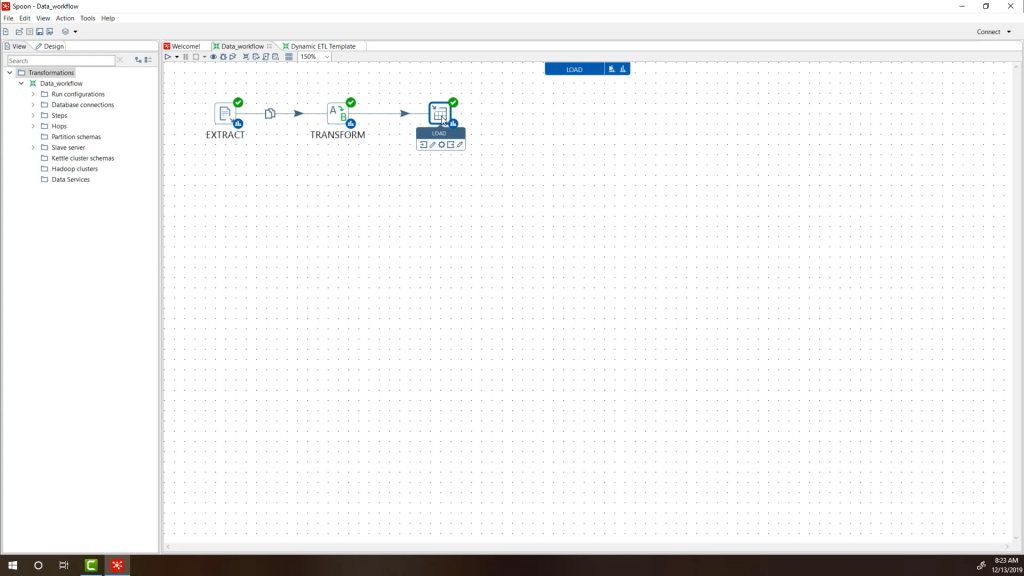

Many ETL tools provide a graphical interface for designing ETL flows via drag-and-drop. Common components are:

- Sources & destinations to read/write data

- Transformations to manipulate data

- Workflow management, scheduling, monitoring etc.

Developers can also write custom code in languages like Python, Java, etc. for specific logic.
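Conceptually, the sources, transformations and destinations in a visual designer behave like composable steps, and a custom code step is one more link in the chain. The Python sketch below illustrates that idea under simplified assumptions; `run_pipeline`, `filter_rows` and `mask_email` are hypothetical names, not the API of any particular tool.

```python
from typing import Callable, Iterable, Iterator, List

Record = dict
Transform = Callable[[Iterator[Record]], Iterator[Record]]


def list_source(records: Iterable[Record]) -> Iterator[Record]:
    """Source component: yields records (a stand-in for a database or API connector)."""
    yield from records


def filter_rows(predicate: Callable[[Record], bool]) -> Transform:
    """Built-in style transformation: keep only rows that match a predicate."""
    def step(rows: Iterator[Record]) -> Iterator[Record]:
        return (r for r in rows if predicate(r))
    return step


def mask_email(rows: Iterator[Record]) -> Iterator[Record]:
    """Custom code step: a hypothetical business rule that masks e-mail addresses."""
    for r in rows:
        user, _, domain = r["email"].partition("@")
        yield {**r, "email": user[:1] + "***@" + domain}


def run_pipeline(source: Iterator[Record], steps: List[Transform], sink: list) -> list:
    """Chain the source through each transformation in order, then write to the sink."""
    rows = source
    for step in steps:
        rows = step(rows)  # generators are lazy, so rows stream through every step
    sink.extend(rows)
    return sink


out = run_pipeline(
    list_source([
        {"email": "jane@example.com", "active": True},
        {"email": "li@example.org", "active": False},
    ]),
    [filter_rows(lambda r: r["active"]), mask_email],
    sink=[],
)
print(out)
```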

With robust ETL tools, organizations can build and manage complex ETL pipelines to efficiently move data between diverse systems. They are widely used in data warehousing, data lakes and other analytics initiatives.

How much do they cost?

ETL tools range from completely free open source tools to commercial products costing hundreds of thousands of dollars. Here is an overview of ETL tool pricing:

- Free Open Source ETL Tools: These include solutions such as Pentaho Data Integration CE and Talend Open Studio, which have free versions. The main limitation is the lack of enterprise-grade support.

- Freemium ETL Tools: Some cloud ETL tools like Hevo, Fivetran, etc. have free plans for small volumes. Paid plans unlock more features. Great for startups and smaller teams.

- Commercial Cloud ETL Tools: Azure Data Factory, AWS Glue and similar services charge based on the compute resources used. Costs can range from a few hundred dollars to thousands of dollars per month.

- On-premise ETL Tools: Paid tools such as Informatica and IBM DataStage can cost tens of thousands of dollars in licenses, with additional charges for updates, support, etc.

- Consulting & Managed ETL Services: Optional services for custom ETL development, managed deployments of ETL tools, etc., charged on an effort basis.

Exact pricing depends on factors such as data volumes, use case complexity, the level of support needed, and cloud vs. on-premise deployment. Overall, ETL tools offer options to suit a wide range of budgets.
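For usage-billed cloud services, a back-of-the-envelope estimate helps before starting a trial. The sketch below assumes a simple model of workers × billed hours × hourly rate, with a minimum billed duration per run; the $0.50 rate and the job profile are placeholders, not any vendor's actual price, so check the provider's pricing page for its real compute units and minimums.

```python
def estimate_monthly_cost(runs_per_day, minutes_per_run, workers,
                          rate_per_worker_hour, min_billed_minutes=1.0, days=30):
    """Rough monthly cost for usage-billed ETL jobs.

    Assumed (hypothetical) model: workers x billed hours x hourly rate,
    where each run is billed for at least `min_billed_minutes`.
    Real services define their own compute units, rates and minimums.
    """
    billed_minutes = max(minutes_per_run, min_billed_minutes)
    billed_hours = runs_per_day * days * billed_minutes / 60
    return workers * billed_hours * rate_per_worker_hour


# Hypothetical job: hourly runs (24/day), 6 minutes each, 10 workers, $0.50 per worker-hour.
cost = estimate_monthly_cost(runs_per_day=24, minutes_per_run=6, workers=10,
                             rate_per_worker_hour=0.50)
print(f"${cost:,.2f} per month")  # prints "$360.00 per month"
```

With these inputs, 720 runs of 6 minutes each add up to 72 hours, or 720 worker-hours across 10 workers, which comes to $360 per month. Doubling the run frequency doubles the estimate, which is why data volumes and schedules drive cloud ETL costs.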

How to evaluate and choose the best ETL tool?

Here are some important criteria to evaluate when selecting an ETL tool:

- Data source connectivity: Ability to connect to required data sources and APIs. Cloud tools usually have more connectors.

- Data transformation capabilities: Assess whether the tool provides the transformations your use case requires, such as aggregations, joins and filtering.

- Scalability: The tool must handle growing data volumes over time, using techniques such as parallelism.

- Data quality: Verify that data profiling, cleansing and duplicate removal features meet your needs.

- Workflow orchestration: The tool should provide workflow management, scheduling, monitoring, alerts, etc.

- Ease of use: Evaluate whether non-technical users can work with the tool via visual design, templates, etc.

- Security: Review encryption, access control, data masking, etc. to ensure compliance.

- Support & training: Factor in the quality of vendor support, as well as training costs for adoption.

- Pricing & total cost of ownership: Understand all direct and indirect costs over the tool's lifetime.

- Product roadmap & future direction: Ensure the vendor keeps investing in the product in line with market needs.

It is also important to run proofs of concept (POCs), seek peer reviews and analyze alternatives thoroughly instead of relying only on vendor claims or marketing literature. This rigorous evaluation helps avoid costly tools that underperform.
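One common way to keep the evaluation objective is a weighted scoring matrix: weight each criterion by its importance, score each candidate from POC results and reviews, and rank by the weighted sum. The sketch below is illustrative only; the weights, the 1 to 5 scores and the "Tool A"/"Tool B" names are made up.

```python
# Criteria weights reflect one hypothetical team's priorities; they should sum to 1.
WEIGHTS = {
    "connectivity": 0.25,
    "transformations": 0.20,
    "scalability": 0.15,
    "ease_of_use": 0.15,
    "security": 0.10,
    "total_cost": 0.15,
}

# Scores (1-5) would come from POCs and peer reviews; these tools and numbers are made up.
CANDIDATES = {
    "Tool A": {"connectivity": 5, "transformations": 3, "scalability": 4,
               "ease_of_use": 5, "security": 4, "total_cost": 4},
    "Tool B": {"connectivity": 4, "transformations": 5, "scalability": 5,
               "ease_of_use": 2, "security": 5, "total_cost": 2},
}


def weighted_score(scores, weights):
    """Sum of score x weight over all criteria; fails loudly if a score is missing."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[c] * w for c, w in weights.items())


assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9  # sanity check on the weights
ranking = sorted(CANDIDATES, key=lambda t: weighted_score(CANDIDATES[t], WEIGHTS),
                 reverse=True)
for tool in ranking:
    print(tool, round(weighted_score(CANDIDATES[tool], WEIGHTS), 2))
```

Here Tool A scores 4.2 and Tool B 3.85. A team that weights transformations or scalability more heavily could see the order flip, which makes the trade-offs explicit rather than implicit.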

Best ETL Tools

Best ETL Tools – At a Glance

| Criteria | Integrate.io | Oracle Data Integrator | Stitch | Talend | IBM DataStage | AWS Glue | SAS Data Management | Hevo | Pentaho | Azure Data Factory |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Best For | SMBs | Enterprises | SaaS Data | Broad Use Cases | Complex Pipelines | AWS Ecosystem | Analytics | SaaS Data | Open Source | Microsoft Ecosystem |
| Ease of Use | High | Moderate | High | Moderate | Low | Moderate | Low | High | Moderate | High |
| Price | Freemium | High | Freemium | Moderate | High | Moderate | High | Freemium | Free | Moderate |
| Ratings | 4.6/5 | 4.2/5 | 4.7/5 | 4.3/5 | 4.0/5 | 4.2/5 | 4.1/5 | 4.8/5 | 4.1/5 | 4.4/5 |

Best ETL Tools – Let’s Dive Deeper

1. Integrate.io

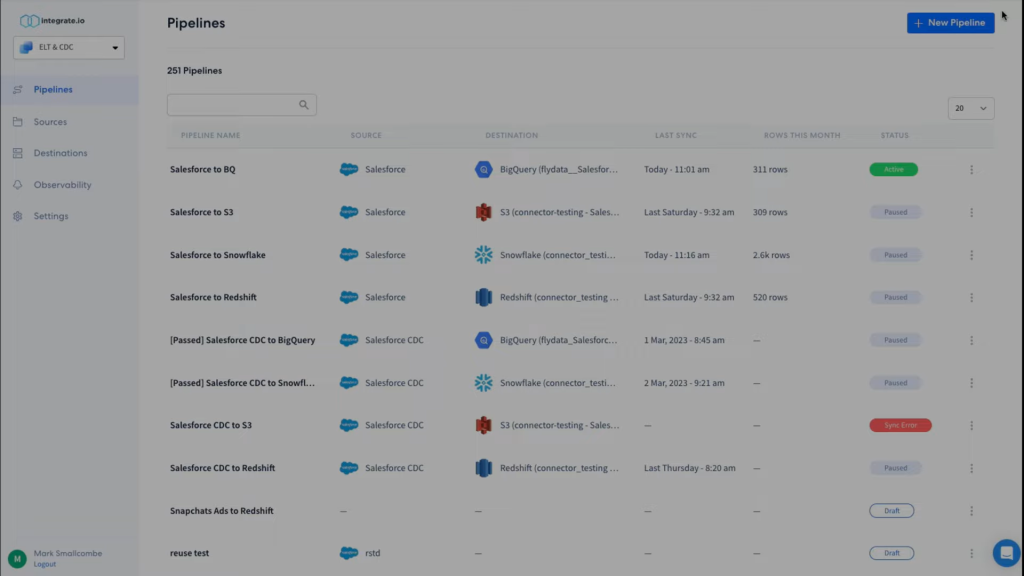

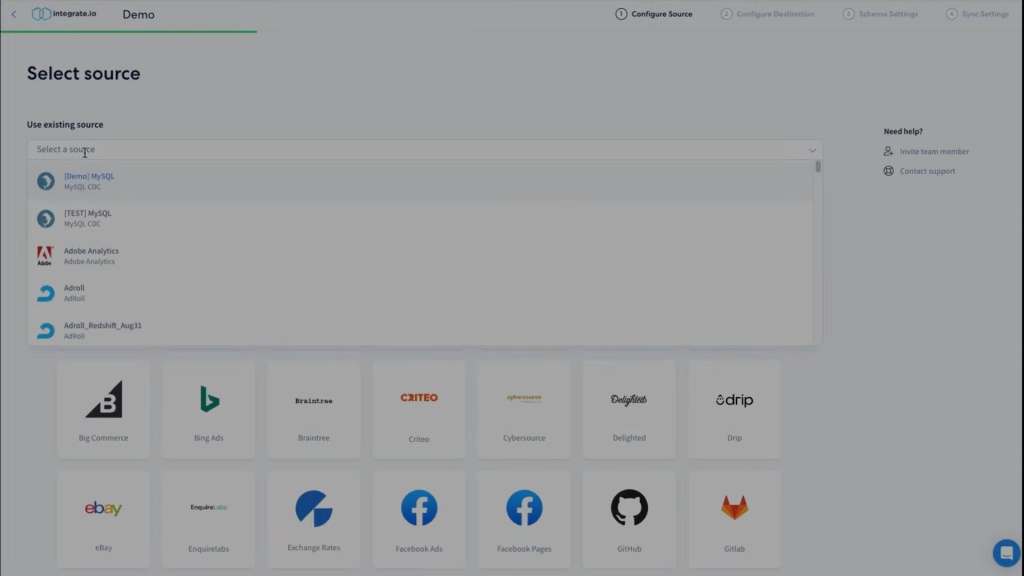

Integrate.io is a cloud-based iPaaS and ETL tool best suited for small and midsize businesses. It makes it easy to move data between APIs, databases, cloud apps and files.

- Key Features: 500+ app integrations, intuitive UI, real-time data sync, monitoring, collaboration

- Price: Free for 1 user. $99 to $499 per month for more.

- Reviews & Ratings: 4.6/5 (G2 Crowd)

- Pros: Affordable, easy to use, great support

- Cons: Can’t handle very complex pipelines

Integrate.io is designed to empower non-technical users to integrate their business apps and automate workflows. It provides an intuitive drag-and-drop interface to visually map out integrations between different endpoints. Users can simply select data sources and destinations, map fields and define transformations with just a few clicks.

The pre-built connectors make it easy to link web services, CRMs, databases, files and more. Developing an integration is as simple as connecting apps, testing and deploying. Monitoring tools allow tracking integration logs and errors in real-time. Collaboration features like shareable links and team workspaces streamline working with others.

While Integrate.io may not handle highly complex ETL pipelines, it excels at use cases of small to medium complexity. Its affordable pricing, ease of use and strong support make it a great choice for growing teams.

Key Features

- 500+ pre-built integrations with web apps, databases

- Drag-and-drop interface for visually building flows

- Scheduled and real-time sync between data sources

- Monitoring dashboards for tracking integration status

- Team collaboration with shareable links and workspaces

Why You Should Consider

Integrate.io is a good choice for small teams that want an easy, affordable ETL and integration platform. Its visual interface allows non-technical users to rapidly automate workflows and keep data in sync across numerous apps and databases.

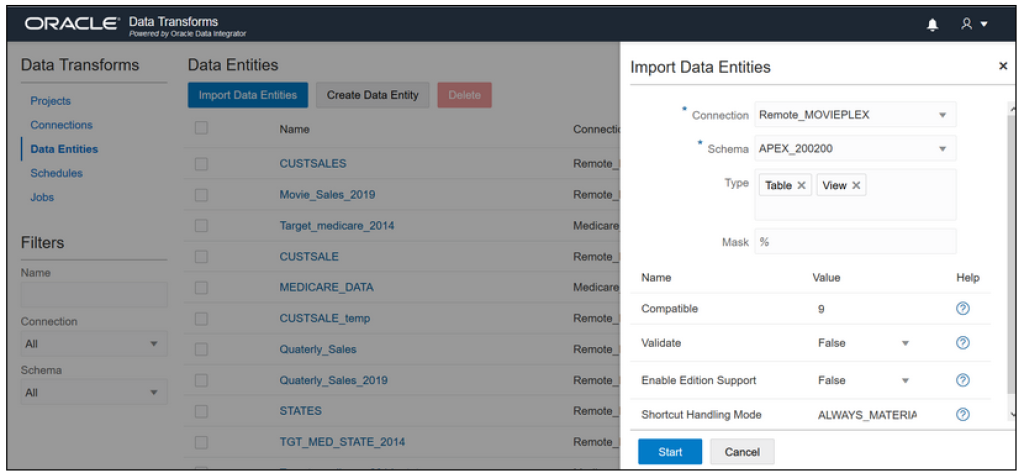

2. Oracle Data Integrator

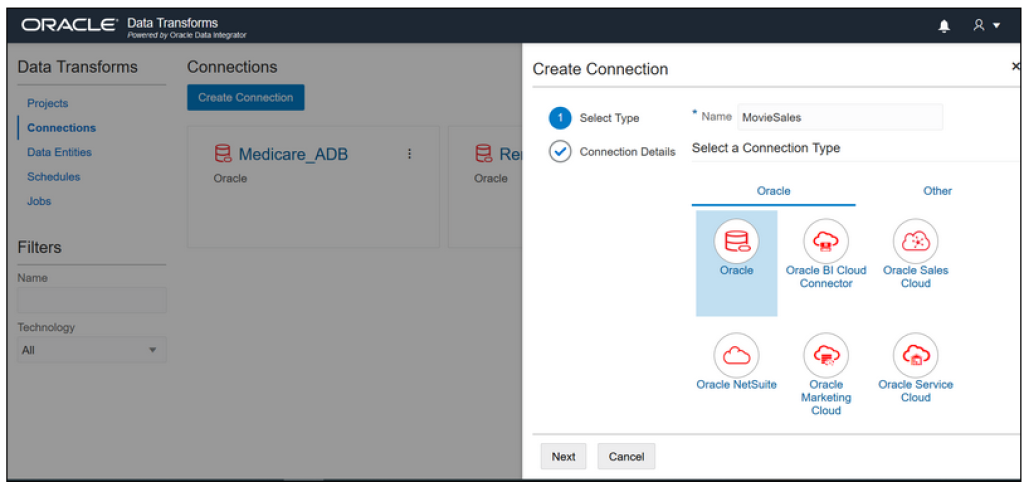

Oracle Data Integrator (ODI) is an on-premise ETL tool focused on high-performance batch data movement for complex environments.

- Key Features: Parallel mapping, data quality, metadata management, resilience

- Price: By quote. $30,000+ for licenses typically.

- Reviews & Ratings: 4.2/5 (TrustRadius)

- Pros: Scalability, resilience, suits complex pipelines

- Cons: Steep learning curve, on-premise only, expensive

ODI provides high-performance ETL capabilities utilizing optimizations like load balancing, native change data capture and parallel mapping. It features robust data quality functions and metadata management. The tool can integrate with diverse data ecosystems.

Oracle Data Integrator is built from the ground up to deliver resilient, high-performance ETL for enterprise deployments. It leverages proprietary mechanisms like Multi-Connection Sessions and Load Plan Parallelism to enable massively parallel data flows. Pushdown optimization utilizes source-specific capabilities for further performance gains.

ODI has extensive built-in data quality profiling, cleansing, matching and monitoring. It maintains a centralized repository storing metadata, models, projects and other artifacts. The metadata-driven approach boosts developer productivity. Language transparency allows switching between coding languages without rework.

While the tool lacks an intuitive visual interface, it makes up for this with its ability to handle large-scale, mission-critical ETL pipelines. The high cost may deter smaller customers, but large enterprises often choose ODI for its robustness.

Key Features

- Parallel mapping architecture for high throughput

- Centralized metadata repository for managing artifacts

- Comprehensive data quality and governance

- Scalability through load balancing, partitioning

- High availability and fault tolerance

Why You Should Consider

Oracle Data Integrator is a proven, resilient ETL tool suitable for the most demanding enterprise environments processing big data volumes.

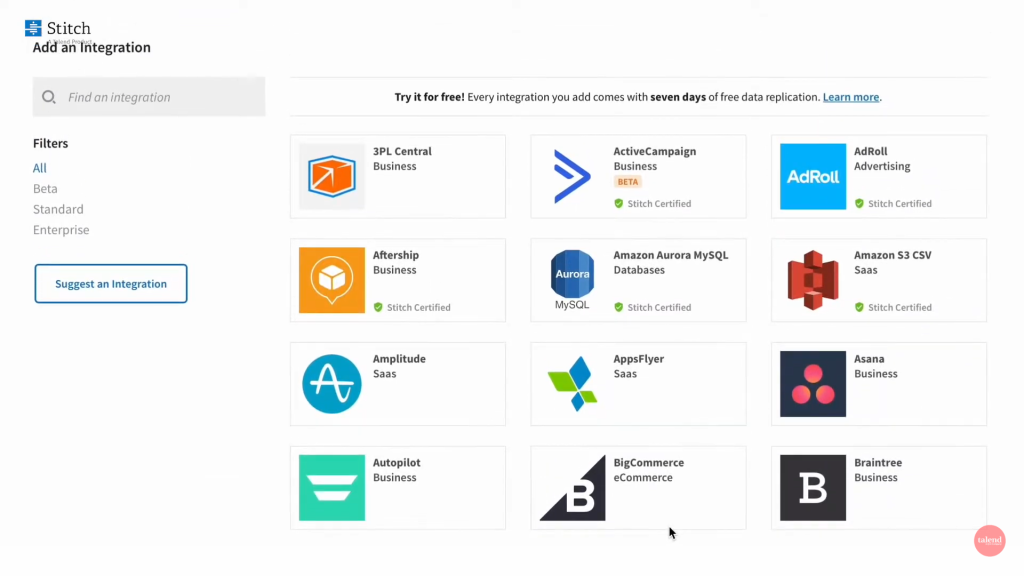

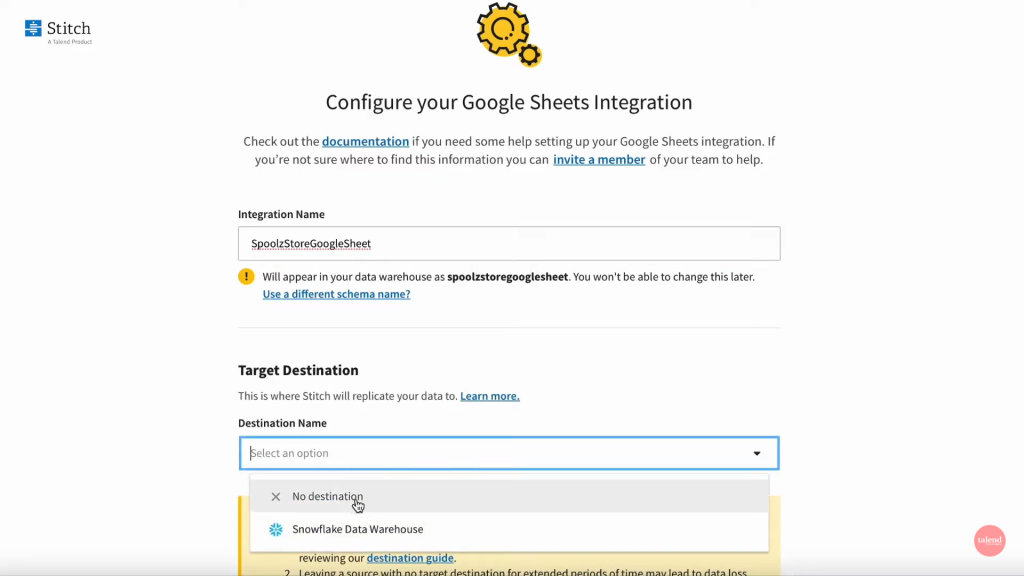

3. Stitch

Stitch is a cloud-based ETL service designed for rapidly moving data from SaaS apps into data warehouses and databases.

- Key Features: Pre-built integrations, simple UI, data monitoring, scalability

- Price: $100 – $1,000/month based on data volume

- Reviews & Ratings: 4.7/5 (G2 Crowd)

- Pros: Easy to use, great for SaaS data sources, reliable

- Cons: Limited transformation capabilities

Stitch makes it easy to extract data from cloud-based apps like Salesforce, Marketo, Slack, etc. and load it into cloud data warehouses and databases. It handles scheduling, transformations, scaling and monitoring under the hood.

A key strength of Stitch is its library of 70+ pre-built integrations with SaaS apps and databases. Users simply select connections, map fields and configure the frequency of data extracts. Built-in data monitoring provides alerts on errors or missing data.

Stitch automatically handles underlying tasks like scheduling, batching, encoding, compression etc. to optimize throughput. The service can scale to accommodate high data volumes as needs grow.

The tool focuses primarily on data movement rather than extensive transformations, which makes it best suited for moving high volumes of SaaS data into cloud data warehouses. The simple UI and automation make Stitch easy to adopt without deep technical skills.

Key Features

- 70+ pre-built integrations with SaaS apps, databases

- Intuitive UI to set up and monitor data pipelines

- Advanced scaling, scheduling, error handling mechanisms

- Data monitoring with alerts on problems

- Straightforward pricing based on volume

Why You Should Consider

Stitch offers a convenient way to get data from popular SaaS applications into data warehouses. Its automation and ease of use shorten the path to analytics.

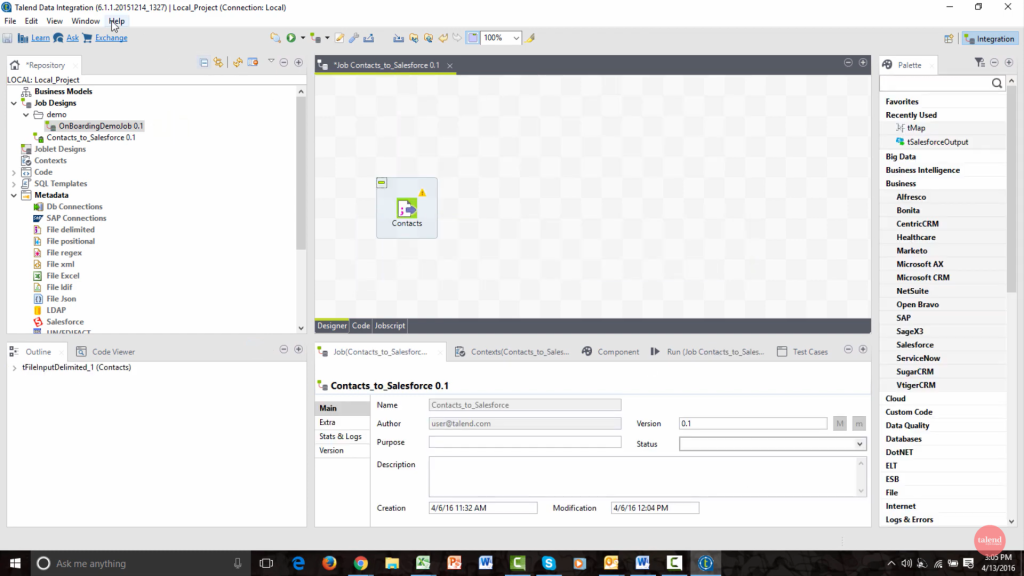

4. Talend

Talend offers a unified platform for data integration, integrity and governance across cloud and on-premise environments.

- Key Features: Broad connectivity, data quality, metadata management, catalog

- Price: Subscription starts ~$2,000/month

- Reviews & Ratings: 4.3/5 (G2 Crowd)

- Pros: Unified platform, strong data governance

- Cons: Steep learning curve, expensive

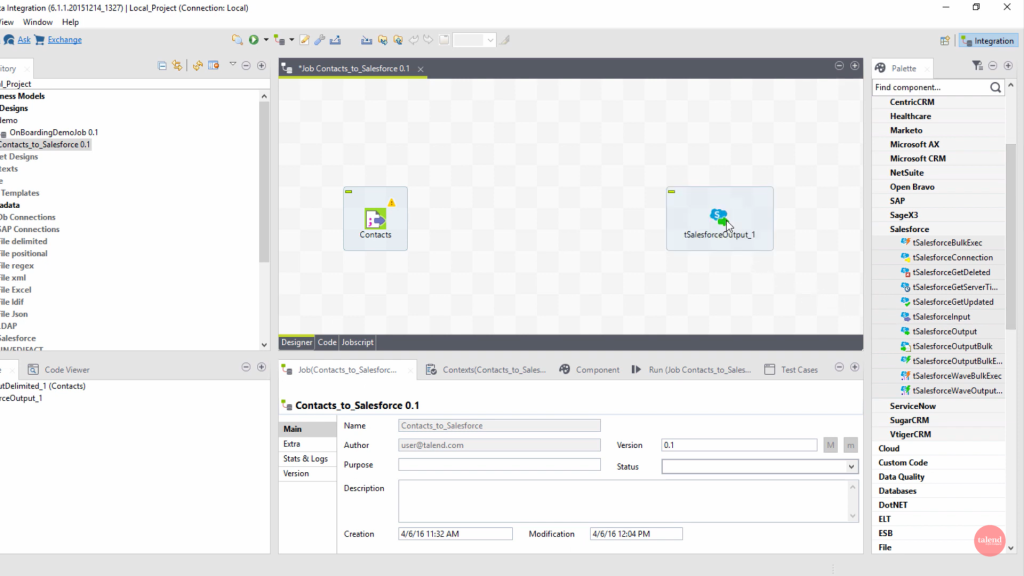

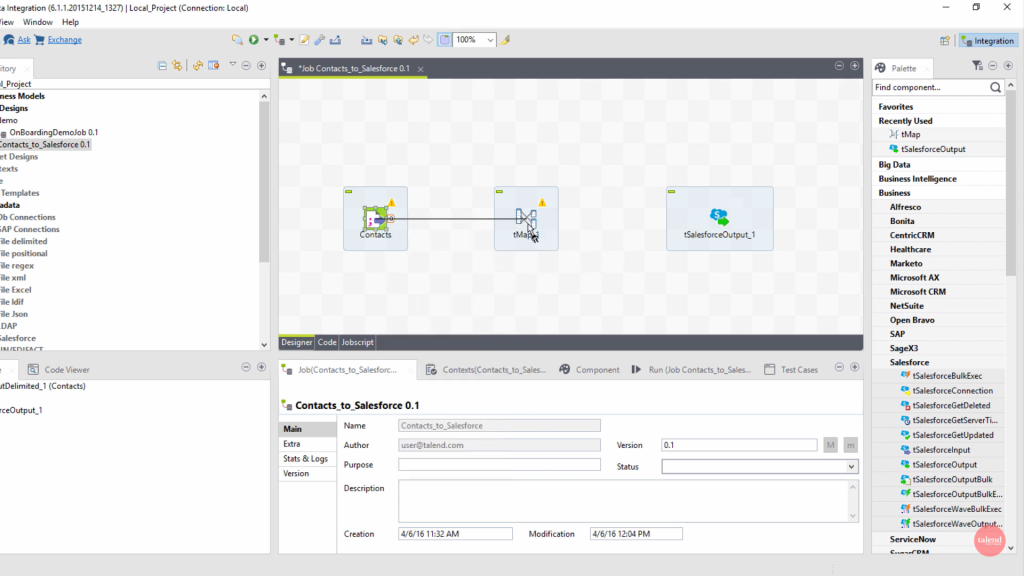

Talend handles advanced data transformations, data lake ingestion, data quality and metadata management in one platform. The hybrid integration capabilities bridge cloud and on-premise systems.

The Talend platform provides components to build complex end-to-end pipelines – from accessing diverse data sources to transforming, enriching, cataloging and distributing quality data across the organization. Hundreds of pre-built connectors minimize development effort.

The data quality tools allow discovery, standardization, cleansing, matching and monitoring to ensure trustworthy information. Robust metadata management maintains technical, business and operational metadata in a central catalog.
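To illustrate what standardization, cleansing and matching mean in practice, here is a small Python sketch. The rules (digits-only phone numbers, a country alias table, exact matching on normalized name and phone) are simplified assumptions; data quality tools such as Talend's offer far richer profiling and fuzzy matching.

```python
import re

records = [
    {"name": "  Acme Corp. ", "phone": "(555) 010-2000", "country": "usa"},
    {"name": "ACME CORP", "phone": "555.010.2000", "country": "US"},
    {"name": "Globex", "phone": "", "country": "United States"},
]

# Hypothetical reference data used to standardize country values.
COUNTRY_ALIASES = {"usa": "US", "us": "US", "united states": "US"}


def standardize(rec):
    """Standardization: strip punctuation, normalize case, digits-only phones, map aliases."""
    name = re.sub(r"[^\w\s]", "", rec["name"]).strip().upper()
    phone = re.sub(r"\D", "", rec["phone"])
    country = COUNTRY_ALIASES.get(rec["country"].strip().lower(), rec["country"])
    return {"name": name, "phone": phone, "country": country}


def validate(rec):
    """Cleansing rule: return a list of problems instead of silently loading bad rows."""
    issues = []
    if len(rec["phone"]) != 10:
        issues.append("phone")
    return issues


def match_key(rec):
    """Matching: a simple deterministic key on normalized fields."""
    return (rec["name"], rec["phone"])


clean, rejected, seen = [], [], set()
for rec in map(standardize, records):
    if validate(rec):
        rejected.append(rec)  # route to a quarantine table for review
    elif match_key(rec) not in seen:
        seen.add(match_key(rec))
        clean.append(rec)  # first occurrence wins; later matches are duplicates

print(clean)
print(rejected)
```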

Natively optimized connectors for AWS, Azure and GCP allow mixing services across cloud and on-premise infrastructure. Unified logging and monitoring provide observability across all integration flows.

Talend requires more technical expertise than simpler ETL tools, but it offers an enterprise-grade, scalable platform for managing the complete data integration lifecycle.

Key Features

- Unified platform across on-premise, multi-cloud data

- Broad connectivity to databases, files, apps, APIs

- Automated data quality and governance

- Management of technical, business and operational metadata

- Centralized logging and monitoring

Why You Should Consider

Talend provides a holistic, enterprise-ready data integration platform equally adept at large scale batch or real-time use cases across hybrid infrastructure.

5. IBM DataStage

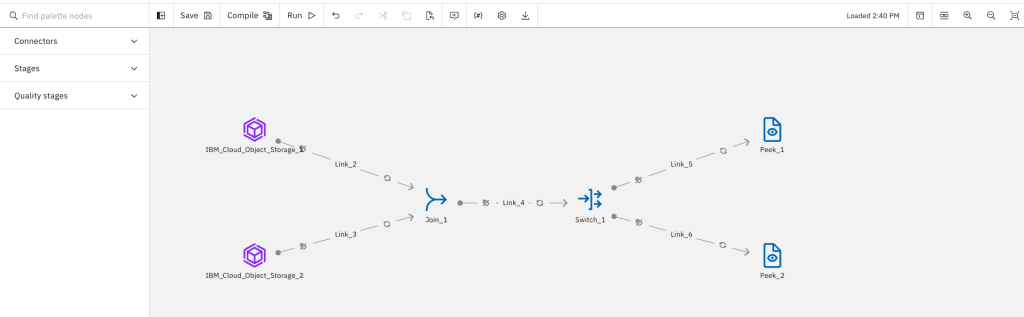

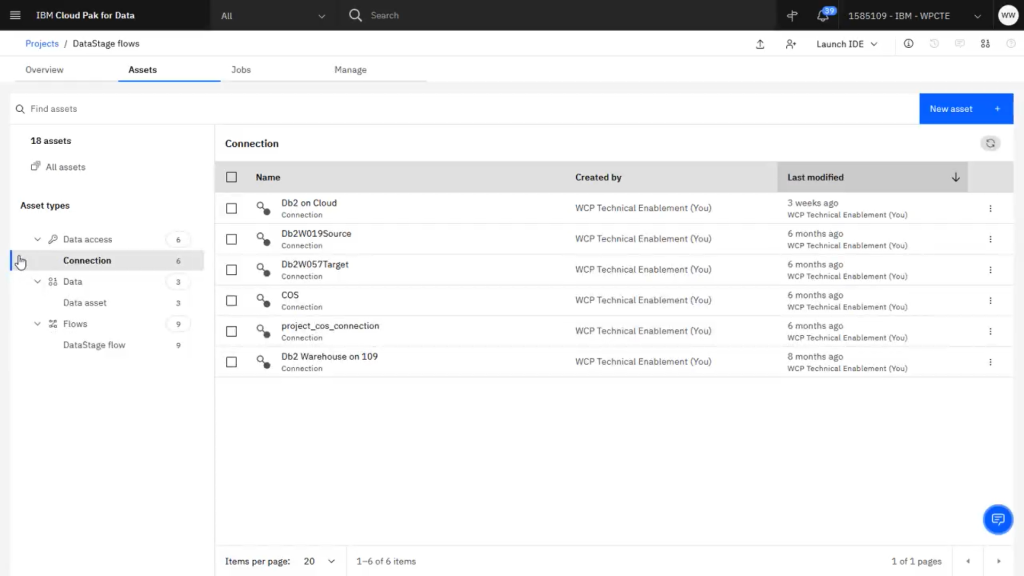

IBM DataStage is an enterprise-grade ETL and data integration tool that excels at handling complex data pipelines.

- Key Features: Visual design, native Big Data connectors, scalable architecture, data quality

- Price: By quote, licenses start ~$35,000

- Reviews & Ratings: 4.0/5 (TrustRadius)

- Pros: Handles complex workflows, integrates diverse data, robust architecture

- Cons: Steep learning curve, expensive, on-premise only

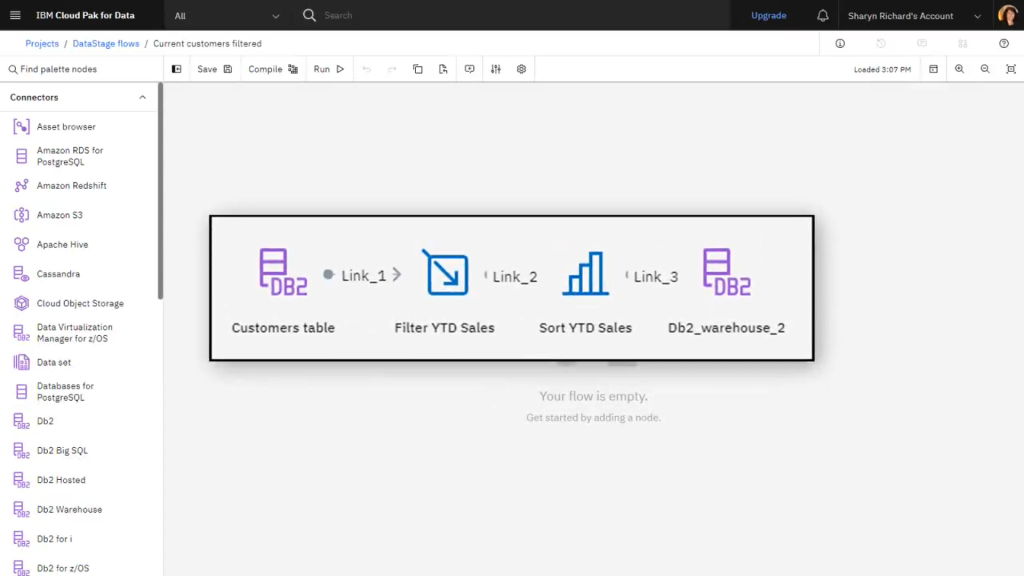

IBM DataStage provides extensive capabilities to build, debug, run and monitor ETL processes that ingest data from a wide array of sources into target databases or Hadoop. The visual interface allows constructing ETL workflows with drag-and-drop components and underlying code generation.

Native Big Data connectors provide optimized data access for platforms like Hadoop, Cassandra, MongoDB etc. The highly scalable data processing engine leverages techniques like partitioning, parallelism and workload balancing to speed up pipelines. Integrated data quality and governance features improve reliability of delivery pipelines.
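The partitioning-and-parallelism idea can be sketched in a few lines of Python: hash-partition rows by key so that each key lands in exactly one partition, process the partitions concurrently, then merge the partial results. This is a simplified illustration, not how DataStage's engine is implemented. It uses threads to stay self-contained, whereas CPU-heavy work would typically run partitions in separate processes or on separate nodes.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def hash_partition(rows, key, n):
    """Split rows into n partitions so that all rows with the same key land together."""
    parts = [[] for _ in range(n)]
    for row in rows:
        # crc32 is deterministic across runs, unlike Python's salted hash() for strings
        parts[zlib.crc32(str(row[key]).encode()) % n].append(row)
    return parts


def aggregate(partition):
    """Per-partition work: total sales by region."""
    totals = defaultdict(float)
    for row in partition:
        totals[row["region"]] += row["amount"]
    return dict(totals)


rows = [
    {"region": "EU", "amount": 10.0}, {"region": "US", "amount": 5.0},
    {"region": "EU", "amount": 2.5}, {"region": "APAC", "amount": 7.0},
    {"region": "US", "amount": 1.0},
]

partitions = hash_partition(rows, "region", n=3)
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(aggregate, partitions))

# Partitions are keyed on region, so each region appears in exactly one partial result.
result = {}
for part in partials:
    result.update(part)
print(dict(sorted(result.items())))
```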

While the tool lacks simplicity, its ability to handle intricate workflows that integrate real-time and batch data at scale makes it a staple in large enterprises. Its high cost and on-premise footprint also aim it squarely at bigger deployments.

Key Features

- Graphical design, debugging and monitoring of ETL processes

- Connectors for diverse data sources – RDBMS, NoSQL, Hadoop, cloud

- Scalable architecture using partitioning, parallelism

- Integrated data profiling, cleansing, governance

- Metadata management across data pipeline

Why You Should Consider

IBM DataStage excels at mission-critical data integration in complex environments due to its robust architecture, connectors and enterprise-grade capabilities.

6. AWS Glue

AWS Glue is a fully managed ETL service offered as part of the AWS cloud ecosystem for preparing and loading data.

- Key Features: Managed service, data catalog, automatic schema detection, serverless ETL

- Price: Pay-as-you-go, based on compute (DPU-hours) consumed and billed per second

- Reviews & Ratings: 4.2/5 (G2 Crowd)

- Pros: Fully managed, scales automatically, integrates with AWS services

- Cons: Glue-specific abstractions (such as DynamicFrames), less flexibility compared to open source ETL tools

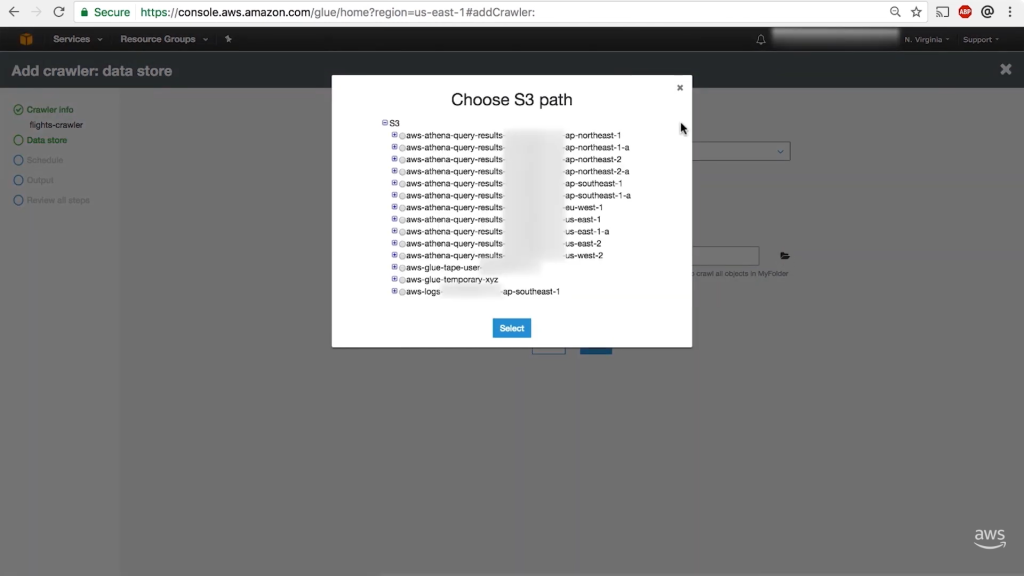

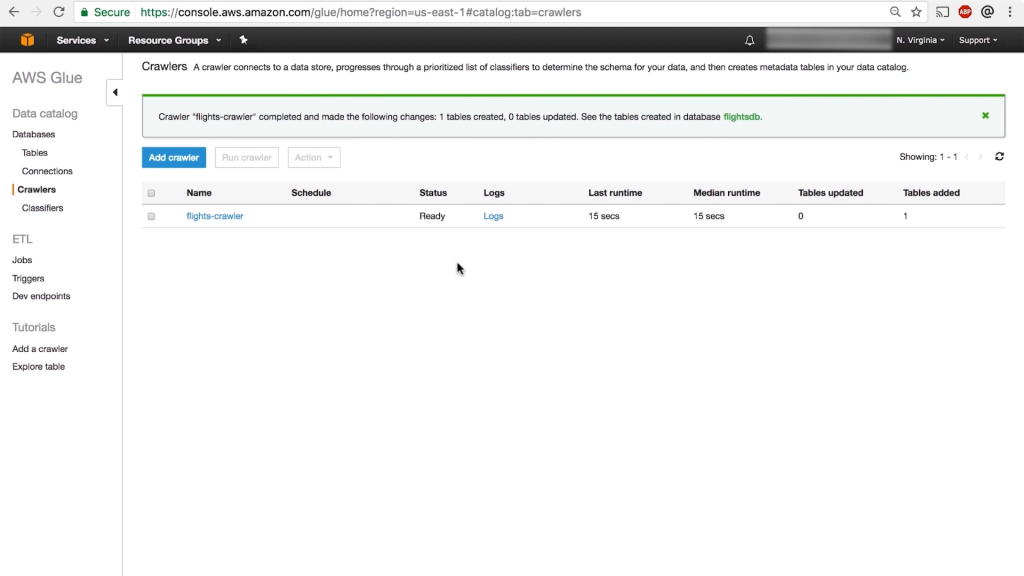

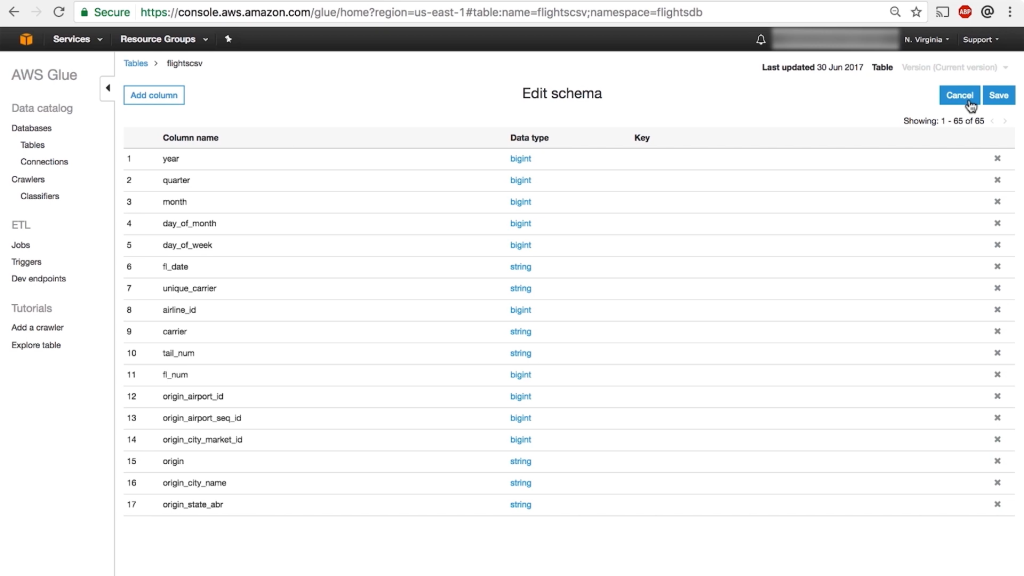

AWS Glue provides a serverless ETL platform to prepare and transform data for analytics. Since it is a fully managed service, it eliminates infrastructure management tasks. Users only need to point Glue to data sources and tune extract and transform jobs.

Key capabilities include automatic schema detection and cataloging, ETL code generation, built-in data quality checks and diverse connectivity options for accessing AWS data stores. It natively integrates with AWS services such as S3, Redshift and RDS.
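Automatic schema detection boils down to sampling data and inferring a type for each column. Glue's crawlers use classifiers for this; the Python sketch below is a toy version of the idea, not Glue's algorithm, and its type names and rules (for example, treating only "true"/"false" as booleans) are assumptions.

```python
import csv
import io
from datetime import date

SAMPLE = """id,signup_date,score,active
1,2024-01-05,9.5,true
2,2024-02-11,,false
3,2024-03-20,7,true
"""


def _all_parse(values, parse):
    """True if every value can be parsed by `parse` without a ValueError."""
    try:
        for v in values:
            parse(v)
        return True
    except ValueError:
        return False


def infer_type(values):
    """Return the narrowest type name that fits every non-empty sample value."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "string"  # nothing to go on: fall back to the most general type
    if all(v.lower() in ("true", "false") for v in non_empty):
        return "boolean"
    if _all_parse(non_empty, int):
        return "int"
    if _all_parse(non_empty, float):
        return "double"
    if _all_parse(non_empty, date.fromisoformat):
        return "date"
    return "string"


def infer_schema(text):
    """Infer a {column: {type, nullable}} schema from CSV sample text."""
    rows = list(csv.DictReader(io.StringIO(text)))
    return {
        col: {"type": infer_type([r[col] for r in rows]),
              "nullable": any(r[col] == "" for r in rows)}
        for col in rows[0]
    }


schema = infer_schema(SAMPLE)
for col, spec in schema.items():
    print(col, spec)
```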

Glue can automatically scale out ETL jobs to handle large volumes by provisioning more compute resources, and pay-per-use pricing keeps costs in line with actual usage. On the downside, Glue-specific APIs limit the portability of workflows. Overall, Glue offers a convenient option for running ETL within the AWS ecosystem.

Key Features

- Serverless, fully managed ETL service

- Data catalog for discovering, exploring and managing metadata

- Automatic generation of PySpark or Scala code for ETL jobs

- Managed infrastructure for scaling ETL workflows

- Integration with AWS data stores – S3, RDS, Redshift etc.

Why You Should Consider

AWS Glue is a convenient way to orchestrate ETL jobs using AWS services, storage and compute, which makes it a great fit for cloud-centric data.

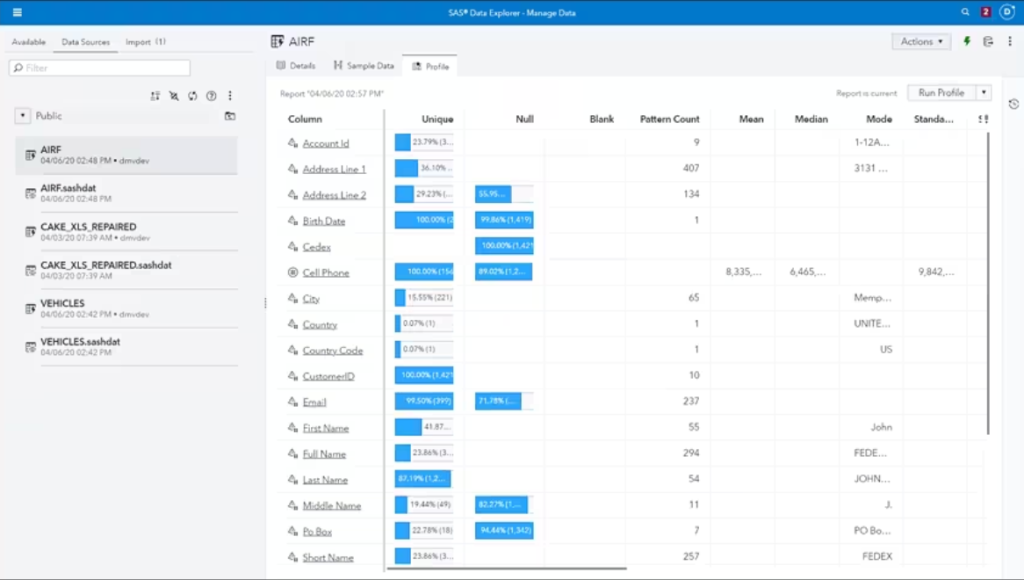

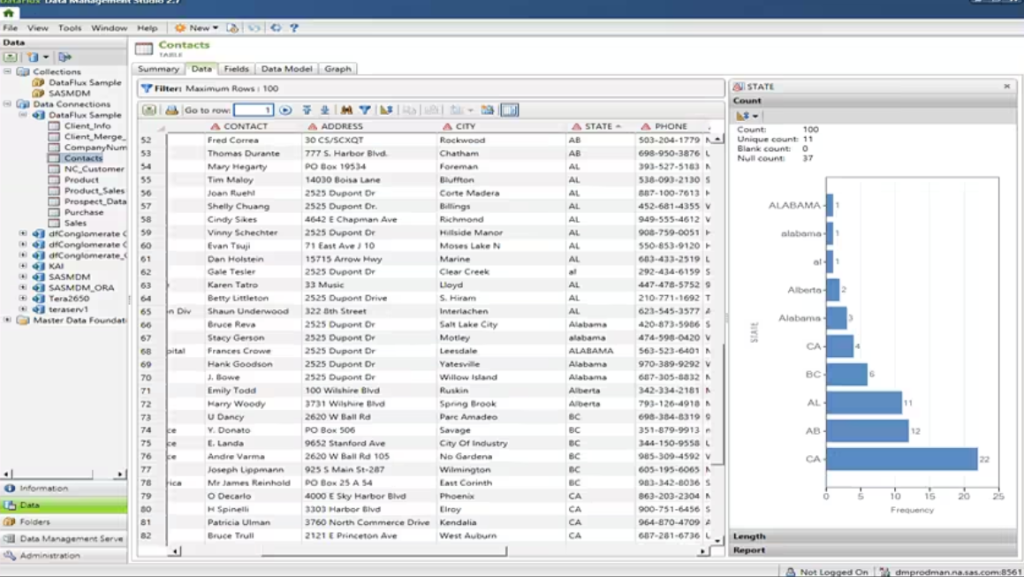

7. SAS Data Management

SAS Data Management provides data integration, data quality, data governance and master data management capabilities built on SAS technology.

- Key Features: data quality, data integration, metadata management, business rules automation

- Price: By Quote, ~$25,000 per core subscription

- Reviews & Ratings: 4.1/5 (TrustRadius)

- Pros: Mature and comprehensive functionality around data

- Cons: Very complex, expensive, legacy technology

SAS has decades of experience in data management which is reflected in the rich features around data integration, data quality, governance and master data management offered by their platform. The centralized metadata repository helps enforce standards and compliance. Users can leverage hundreds of built-in data quality checks or define custom rules and metrics.

The data integration components help consolidate data from disparate sources into consistent, accurate information. Advanced data mapping, enrichment, filtering, joining etc. can be applied to handle diverse data. SAS leverages machine learning to recommend transformations and entity matching models.

While SAS provides vast data management capabilities, the complexity can be overwhelming for smaller teams. The on-premise implementation and high cost also limit its adoption mostly to existing SAS customers in large enterprises.

Key Features

- Data integration for combining, transforming and delivering data

- Data quality tools for profiling, cleansing and monitoring data

- Master data management for managing business entities

- Metadata repository for managing technical, business metadata

- Automated recommendation of data transformations via AI/ML

Why You Should Consider

SAS Data Management offers complete data preparation and governance features leveraging SAS’s decades of experience – great for regulated industries.

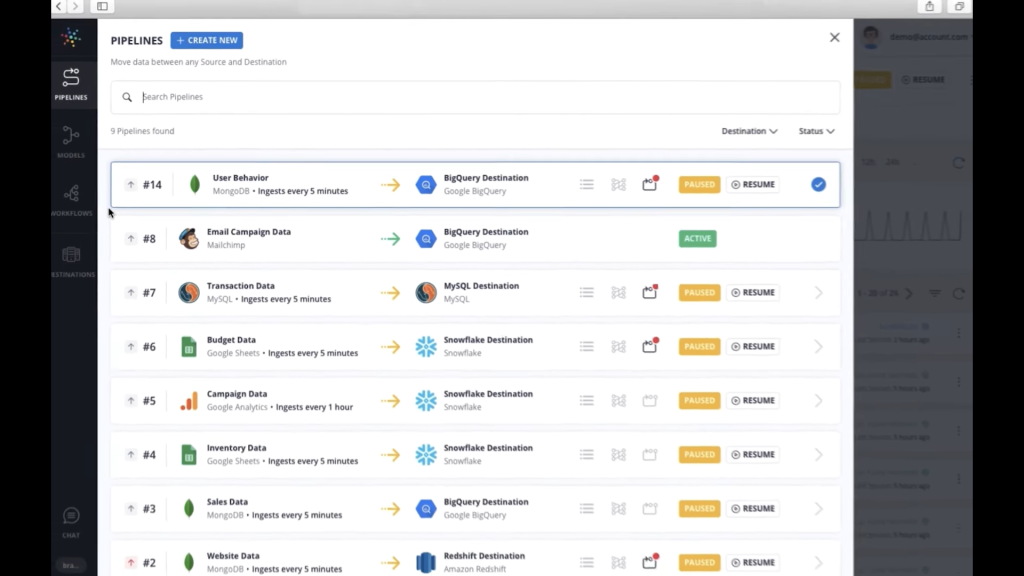

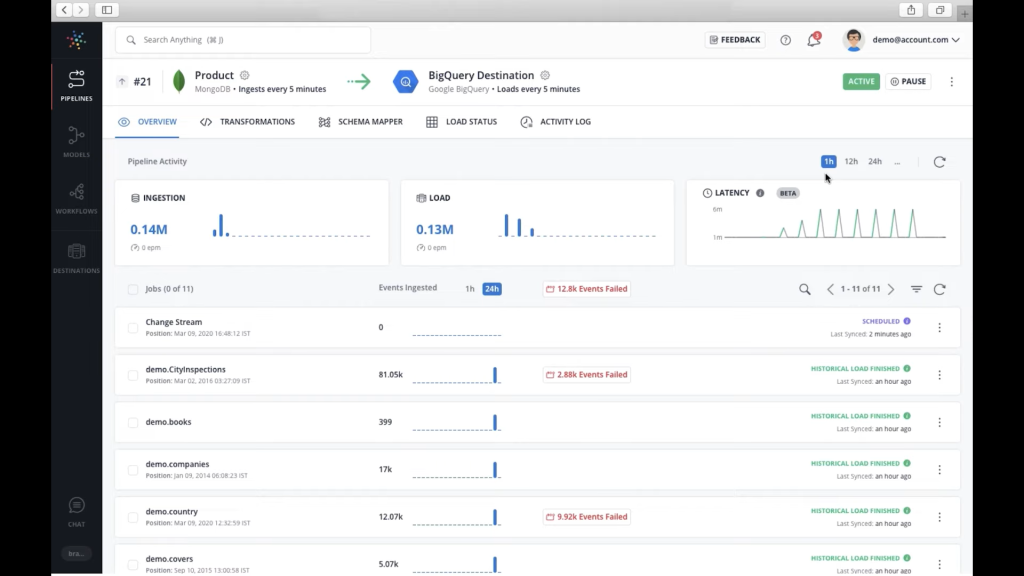

8. Hevo Data

Hevo Data offers an automated, no-code ETL pipeline service with pre-built integrations to SaaS applications and data warehouses.

- Key Features: Web app, real-time data sync, transformation library, scheduling, monitoring

- Price: Free 21-day trial. $999/month base.

- Reviews & Ratings: 4.8/5 (G2 Crowd)

- Pros: Intuitive, fast, reliable, great support

- Cons: Can’t handle complex orchestration and custom code

Hevo provides a straightforward, code-free way to sync data from SaaS applications like Salesforce, Marketo, Google Ads etc. into cloud data warehouses like Snowflake, BigQuery, Amazon Redshift. It offers pre-built connectors and transformations that can be configured visually to build pipelines.

Hevo handles underlying tasks like scheduling, batching, retries, monitoring etc. once pipelines are set up. The no-code approach enables non-technical users to rapidly automate data integration workflows and combine data for analysis without engineering effort.
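Batching and retries, which tools like Hevo handle behind the scenes, can be sketched as follows: split rows into fixed-size batches and retry a failed batch with exponential backoff. The flaky writer is a hypothetical stand-in for a warehouse that is briefly unavailable; this is a generic pattern, not Hevo's implementation.

```python
import time
from functools import partial


class TransientError(Exception):
    """Stand-in for a retryable failure such as a timeout or rate limit."""


def batched(rows, size):
    """Yield consecutive chunks of at most `size` rows."""
    for i in range(0, len(rows), size):
        yield rows[i:i + size]


def with_retries(fn, attempts=4, base_delay=0.01):
    """Call fn(); on TransientError wait base_delay * 2**n seconds and try again."""
    for n in range(attempts):
        try:
            return fn()
        except TransientError:
            if n == attempts - 1:
                raise  # out of attempts: surface the error to monitoring/alerting
            time.sleep(base_delay * 2 ** n)


def make_flaky_writer(fail_times):
    """Hypothetical destination that fails its first `fail_times` calls, then succeeds."""
    state = {"calls": 0}
    written = []

    def write(batch):
        state["calls"] += 1
        if state["calls"] <= fail_times:
            raise TransientError("warehouse busy")
        written.extend(batch)
        return len(batch)

    return write, written, state


write, written, state = make_flaky_writer(fail_times=2)
for batch in batched(list(range(10)), size=4):
    with_retries(partial(write, batch))
print(written, state["calls"])
```

Here the first batch succeeds on its third attempt, so all ten rows arrive after five write calls. Retries are only safe when writes are idempotent (for example, upserts on a primary key); otherwise a batch that partially succeeded could be loaded twice.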

While Hevo lacks the complex orchestration and custom code options of developer-focused ETL tools, it provides the simplicity needed for common SaaS data integration use cases. Its excellent customer support and reliability have earned Hevo a loyal customer base.

Key Features

- 60+ pre-built data source integrations

- Drag-and-drop UI for building pipelines

- Library of 450+ transformation functions

- Real-time sync or scheduled ETL

- Advanced monitoring and alerting

Why You Should Consider

Hevo Data offers a fast, reliable way to sync data from SaaS apps to cloud warehouses for analytics using its library of pre-built elements.

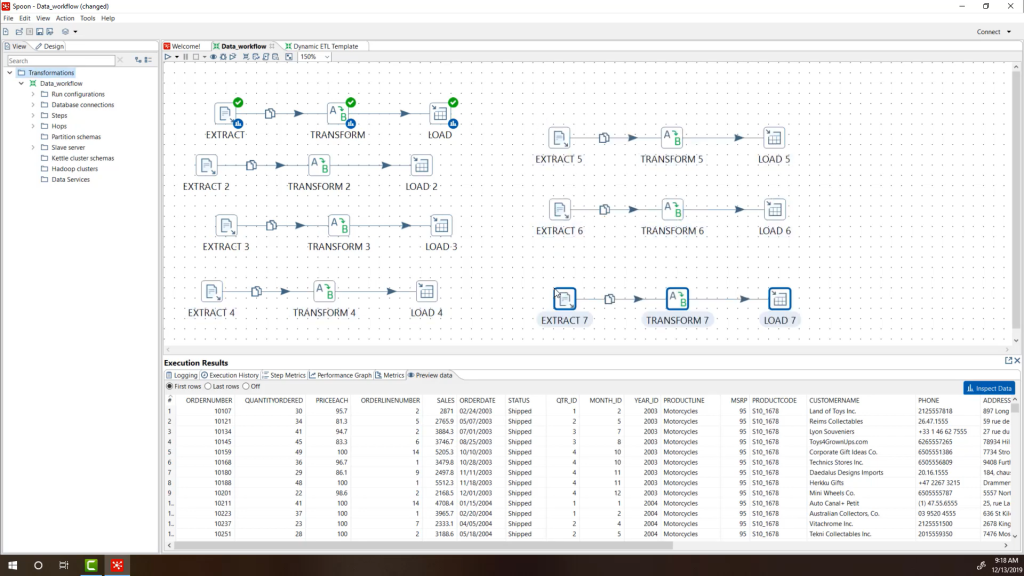

9. Pentaho

Pentaho provides comprehensive data integration and analytics capabilities in a unified, open source platform.

- Key Features: Broad data connectivity, ETL engine, data visualization, workflow engine, community edition

- Price: Community edition free. Enterprise subscription $27,000 per year

- Reviews & Ratings: 4.1/5 (SourceForge)

- Pros: Mature ETL features, highly extensible via plugins, vibrant community edition

- Cons: Steep learning curve, basic scheduling and monitoring

The Pentaho suite includes components for a wide range of data needs: ETL capabilities via Pentaho Data Integration (PDI), analytics and reporting via Pentaho Business Analytics, dashboards and data visualization via the Pentaho User Console, and orchestration through its workflow engine.

As an open source solution, Pentaho offers flexibility to extend functionality via plugins and community support. The ETL tool provides connectivity to a diverse set of applications and databases. The drag-and-drop interface helps with mapping fields and transformations.

While the community edition of Pentaho is very popular, the enterprise edition unlocks advanced features, support and management. Companies looking for a full-featured open source solution benefit from the extensibility, transparency and large community around Pentaho.

Key Features

- Community and enterprise editions for data needs

- Drag-and-drop ETL with diverse connectivity

- Broad set of analytics, reporting, monitoring features

- Orchestration of jobs via workflow engine

- Extensible via plugins and community ecosystem

Why You Should Consider

Pentaho provides a flexible, extensible open source solution covering the complete data integration and analytics lifecycle.

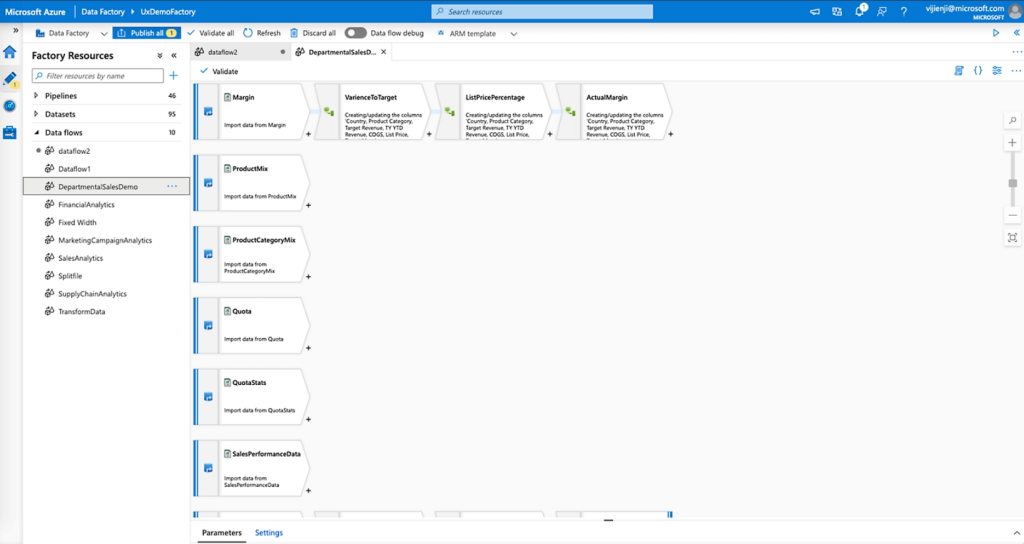

10. Azure Data Factory

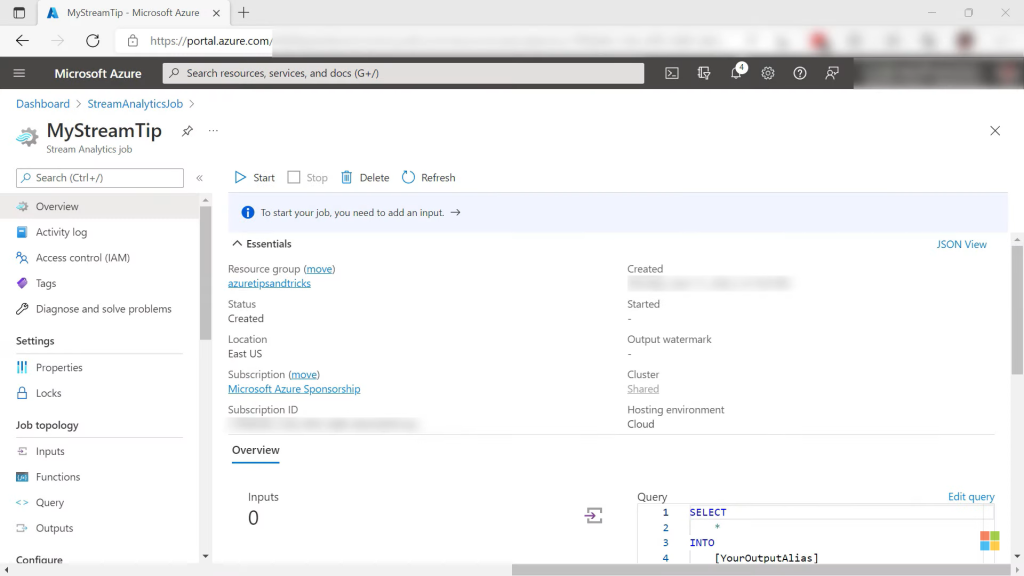

Azure Data Factory is a cloud-based data integration service to orchestrate and automate data movement and transformation.

- Key Features: Visual workflows, SSIS integration, data flow debugging, integration runtimes

- Price: Pay-as-you-go, based on pipeline activity runs and compute used. ~$50/month for basic usage.

- Reviews & Ratings: 4.4/5 (G2 Crowd)

- Pros: Tight Azure integration, visual pipelines, enterprise-grade

- Cons: Steep initial learning curve

Azure Data Factory makes it possible to create data-driven workflows in the cloud that orchestrate data movement between disparate data stores and transform data using compute services such as Azure Databricks and Azure SQL Database.

The visual interface allows constructing pipelines via drag-and-drop. SSIS integration lets teams reuse existing packages while taking advantage of ADF’s capabilities. Built-in Git integration provides version control, and monitoring tools allow tracking pipeline runs and data flows for errors.

Azure Data Factory integrates tightly with other Azure services such as Azure Data Lake Storage and Azure Synapse Analytics (formerly SQL Data Warehouse). A self-hosted integration runtime can run on-premises to support hybrid data pipelines. While it requires learning Azure services, ADF provides enterprise-grade orchestration and automation for analytics use cases on Azure.
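Under the hood, pipeline orchestration means running activities in dependency order and not starting downstream work when an upstream activity fails. The Python sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to show the idea; the activity names are hypothetical and this is not ADF's API.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each activity maps to the activities it depends on.
PIPELINE = {
    "copy_crm": set(),
    "copy_erp": set(),
    "clean_customers": {"copy_crm"},
    "join_orders": {"clean_customers", "copy_erp"},
    "refresh_dashboard": {"join_orders"},
}


def run_pipeline(dag, run_activity):
    """Run activities in dependency order; dependents of a failed activity never start."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    completed, failed = [], []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make run order deterministic
        if not ready:
            break  # only activities blocked by an upstream failure remain
        for activity in ready:
            try:
                run_activity(activity)
            except Exception:
                failed.append(activity)  # not calling done() keeps its dependents blocked
                continue
            completed.append(activity)
            sorter.done(activity)
    return completed, failed


def run_activity(name):
    """Stand-in for a real activity, e.g. a copy or data flow step."""
    if name == "clean_customers":
        raise RuntimeError("bad input file")  # simulated failure


completed, failed = run_pipeline(PIPELINE, run_activity)
skipped = sorted(set(PIPELINE) - set(completed) - set(failed))
print(completed, failed, skipped)
```

Here `copy_crm` and `copy_erp` run, `clean_customers` fails, and `join_orders` and `refresh_dashboard` are never started.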

Key Features

- Graphical construction of pipelines for flow orchestration

- Integration with SSIS for reusing legacy workflows

- Debugging and monitoring of data flows

- Connectors for 85+ data sources

- Integrated with Azure storage, compute and analytics services

Why You Should Consider

Azure Data Factory simplifies creating and managing data integration workflows in Azure. Its scalable architecture makes it suitable for complex enterprise use cases.

FAQs

Here are some commonly asked questions about ETL tools:

What is the difference between ELT and ETL?

ETL (Extract, Transform, Load) involves first extracting data, then transforming it, and finally loading it into the target system.

ELT (Extract, Load, Transform) works by first extracting the data, loading it into the target system, and then transforming it there.

ETL transforms data outside the target system, while ELT transforms it inside. ELT can therefore leverage the processing power of the target system, e.g. a database or cloud data warehouse, for transformations.
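The difference is easiest to see side by side. In the Python sketch below, an in-memory SQLite database plays the target system; the ETL version aggregates in Python before loading, while the ELT version loads raw rows first and aggregates with SQL inside the target. Table names and data are hypothetical.

```python
import sqlite3

raw = [("2024-01-01", "eu", 100), ("2024-01-01", "EU", 50), ("2024-01-02", "us", 70)]


def etl(conn):
    """ETL: transform in the pipeline (Python), then load only the finished result."""
    totals = {}
    for day, region, amount in raw:
        key = (day, region.upper())
        totals[key] = totals.get(key, 0) + amount
    conn.execute("CREATE TABLE daily_sales (day TEXT, region TEXT, total INTEGER)")
    conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)",
                     [(d, r, t) for (d, r), t in totals.items()])


def elt(conn):
    """ELT: load the raw data as-is, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE raw_sales (day TEXT, region TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", raw)
    conn.execute("""
        CREATE TABLE daily_sales AS
        SELECT day, UPPER(region) AS region, SUM(amount) AS total
        FROM raw_sales
        GROUP BY day, UPPER(region)
    """)


results = {}
for name, pipeline in (("ETL", etl), ("ELT", elt)):
    conn = sqlite3.connect(":memory:")
    pipeline(conn)
    results[name] = conn.execute(
        "SELECT day, region, total FROM daily_sales ORDER BY day, region").fetchall()
    conn.close()
print(results["ETL"] == results["ELT"], results["ELT"])
```

Both approaches produce the same table. The ELT variant also keeps `raw_sales` in the target, so the data can be re-transformed later without extracting it again.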

Can ETL tools handle real-time data?

Most ETL tools today can handle batch as well as real-time or streaming data. They provide capabilities to build pipelines that can capture change data and replicate it continuously between systems with low latency.

Solutions such as Kafka Connect and Spark Streaming have made real-time data integration easier to implement alongside traditional batch ETL workflows.
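A simple way to capture changes continuously is incremental extraction with a bookmark (high-water mark): each run fetches only rows whose `updated_at` is newer than the last value seen. The sketch below assumes such a column exists; the `orders` table is hypothetical. Polling like this misses hard deletes and can miss rows that share a timestamp with the bookmark, which is why log-based change data capture reads the database's transaction log instead.

```python
import sqlite3


def incremental_extract(conn, bookmark):
    """Return rows changed since `bookmark` plus the new bookmark (high-water mark)."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (bookmark,),
    ).fetchall()
    # Advance the bookmark to the newest change seen; keep it if nothing changed.
    new_bookmark = rows[-1][2] if rows else bookmark
    return rows, new_bookmark


# Hypothetical source table; ISO-8601 strings in one format compare correctly as text.
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)")
src.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, "new", "2024-05-01T10:00:00"),
    (2, "new", "2024-05-01T10:05:00"),
])

bookmark = ""  # first run: every timestamp sorts after the empty string
batch1, bookmark = incremental_extract(src, bookmark)

# Between runs, order 1 is updated and order 3 is created.
src.execute("UPDATE orders SET status = 'shipped', updated_at = '2024-05-01T11:00:00' WHERE id = 1")
src.execute("INSERT INTO orders VALUES (3, 'new', '2024-05-01T11:30:00')")

batch2, bookmark = incremental_extract(src, bookmark)
print(len(batch1), [row[0] for row in batch2], bookmark)
# prints: 2 [1, 3] 2024-05-01T11:30:00
```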

Do ETL tools require coding?

Most ETL tools provide a visual interface for building pipelines by dragging and dropping components, without writing code. Some simpler tools require no coding at all.

However, many ETL tools also allow custom code snippets to be added at various points using languages like Python, Java, etc. This helps handle complex logic that goes beyond basic transforms.

So coding knowledge is useful for advanced logic but is generally not mandatory.

Are there open-source ETL tools?

Yes, there are many popular open source ETL tools available including:

- Apache Airflow: Workflow management platform

- Talend Open Studio: Broad set of data integration tools

- Pentaho: Data integration and analytics suite

- CloverETL: ETL environment for moving and transforming data

Benefits of open source ETL tools include no license cost and community-driven development. Most open source ETL tools also offer commercial editions with professional support.

Conclusion

ETL tools provide the critical backbone enabling enterprises to harness data from diverse systems and empower analytics-driven decision making. As data environments get more complex, choosing the right ETL tool is an important factor in the success of data integration initiatives.

This guide covered the spectrum of ETL tools available today ranging from free open source to full-featured commercial products. Cloud data integration services are also gaining traction with their ease of use.

When evaluating options, consider both current and future needs around scale, performance, required integrations, data quality, ease of use, total cost of ownership, etc. Use trial versions and proofs of concept during the assessment before making a final decision.

The ETL landscape will continue evolving alongside data technologies. But a robust data integration platform will remain foundational for organizations aiming to derive value from data through deeper analysis.